Global Summit Calls for Working Together for AI Safety

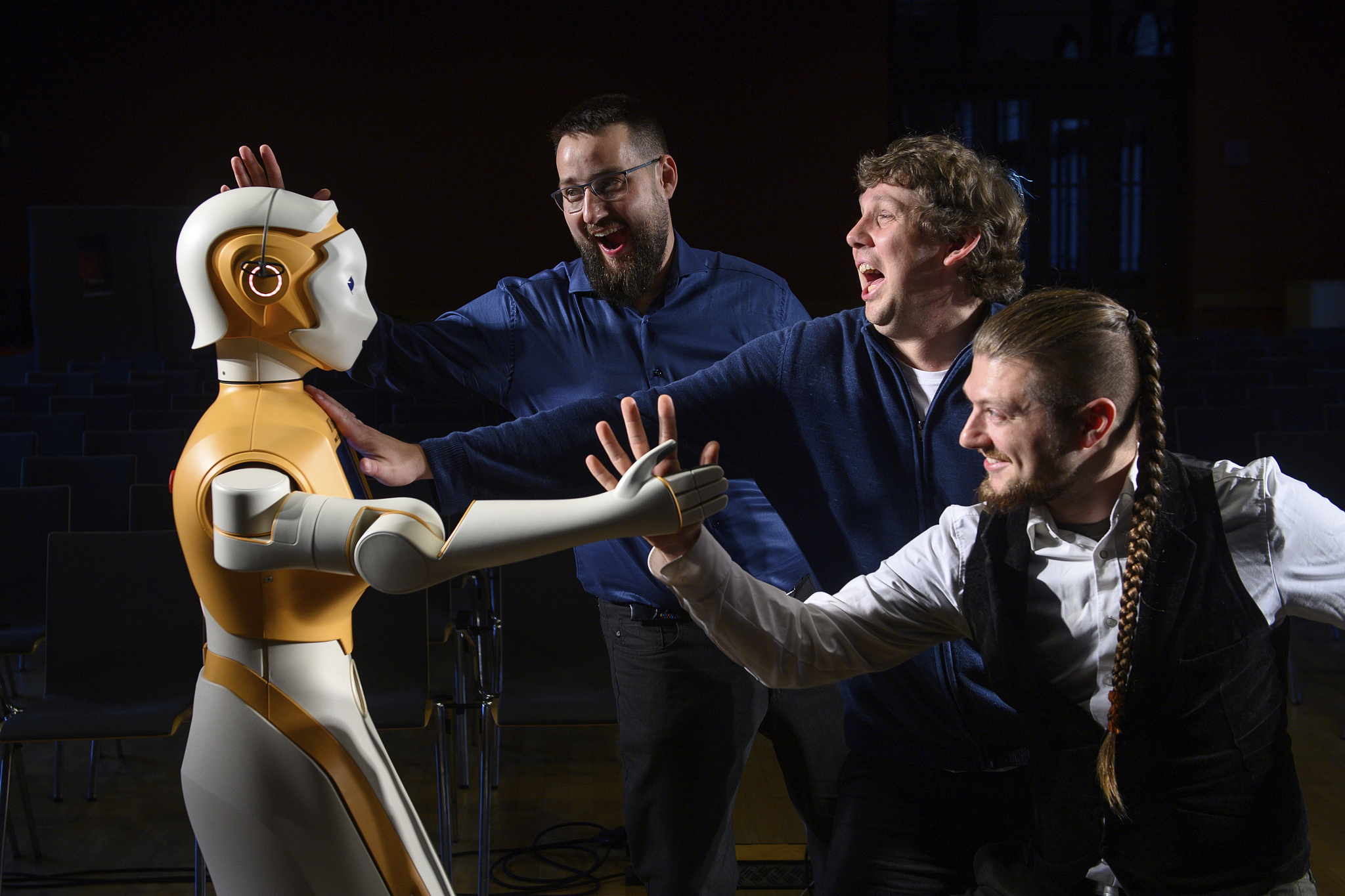

Matthias Busch (L), Ingo Siegert (C) and Dominykas Strazdas from the Institute of Information and Communication Technology, Department of Mobile Dialog Systems at Germany's Otto von Guericke University, shake hands with the humanoid robot "Ari" on October 26, 2023. (PHOTO: VCG)

Edited by TANG Zhexiao

How to deal with the risks from rapidly developing artificial intelligence (AI) has become a priority worldwide since ChatGPT, an AI-powered language model that can create human-like text, was released to the public last year.

The AI Safety Summit 2023, a global meeting convened by the UK in Bletchley on November 1-2, brought together politicians, AI company representatives and experts to discuss the global future of AI and work toward a shared understanding of its risks.

Sifted, a media site on European start-ups, said the summit was "broadly hailed as a diplomatic success because it managed to bring together senior Chinese and U.S. officials around the same table, and was bolstered by the participation of the European Commission president Ursula von der Leyen and even tech entrepreneur Elon Musk."

Global AI statement inked

At the summit, 28 participating countries and the European Union signed the Bletchley Declaration, acknowledging that AI “should be designed, developed, deployed and used in a manner that is safe, in such a way as to be human-centric, trustworthy and responsible” and agreeing to work together to ensure that.

According to the UK government, the declaration aims to identify AI safety risks of shared concern and to build respective risk-based policies across the participating countries to ensure safety in light of those risks.

The declaration noted that particular safety risks arise at the "frontier" of AI, meaning the highly capable general-purpose AI models, including foundation models, that could perform a wide variety of tasks, as well as relevant specific narrow AI that could exhibit capabilities that cause harm, matching or exceeding the capabilities of today’s most advanced models.

UK science minister Michelle Donelan said the declaration was “a landmark achievement” that laid the foundations for the summit's discussions.

However, some experts think the declaration is not comprehensive enough. Paul Teather, CEO of AI-enabled research firm AMPLYFI, told Euronews Next that bringing major powers together to endorse ethical principles can be viewed as a success, but that concrete policies and accountability mechanisms must follow swiftly.

Countries moving at their own pace

Participants in Bletchley reported their progress in AI governance and supervision.

UK officials have made it clear that they do not think regulation is needed, or even possible, at this stage given how fast the industry is moving, The Guardian reported.

French economy and finance minister Bruno Le Maire emphasized that Europe must innovate before regulating.

The French government fought hard for open source, an approach that allows users to develop, modify and distribute a model. “We shouldn’t discard open source upfront,” said Jean-Noël Barrot, France’s junior minister in charge of digital issues, Sifted reported. “What we’ve seen in previous generations of technologies is that open source has been very useful both for transparency and democratic governance of these technologies.”

The U.S. will launch an AI safety institute to evaluate known and emerging risks of so-called frontier AI models, U.S. Secretary of Commerce Gina Raimondo said on November 1.

China ready to enhance AI safety with all sides

The Chinese delegation at the summit emphasized the need for international cooperation on AI safety and governance issues, urging increased representation of developing countries in global AI governance.

According to Wu Zhaohui, China's vice minister of science and technology, China was willing to "enhance dialogue and communication in AI safety with all sides."

U.S. media outlet CNBC reported Donelan as saying that it was a "massive" gesture that Chinese government officials chose to attend the UK AI summit.

Elon Musk, CEO of Tesla and SpaceX, hailed UK Prime Minister Rishi Sunak’s decision to invite China as “essential,” Politico Europe reported. “Having them here is essential,” Musk reportedly said. “If they’re not participants, it’s pointless.”

“There’s no safety without China,” according to Sifted. “China was a key participant at the summit, given its role in developing AI. Its involvement in the summit was described as constructive.”

European Commission vice president for values and transparency Věra Jourová, who visited Beijing in September to hold talks on AI and international data flows, said: “I think it was important that they were here, also that they heard our determination to work together, and honestly, for the really big global catastrophic risks, we need to have China on board.”

China launched the Global Artificial Intelligence Governance Initiative on October 18, presenting a constructive approach to addressing universal concerns over AI development and governance.